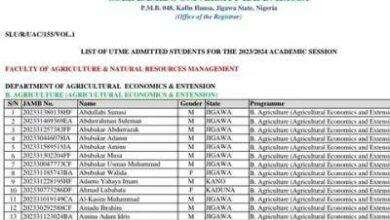

Spak Past Questions in PDF Format

As a Nigerian student in SS2, your chances of doing well, or of being named one of the champions, in the 2021 Interswitch SPAK National Science Competition depend heavily on how well you study past questions and their answer guides. If you are preparing for Interswitch SPAK 3.0, this page explains how to download the SPAK past questions and answers. Reviewing previous questions and becoming familiar with the solutions before the exam will benefit you throughout the competition.

What is Spak Past Questions in PDF

It is a yearly search across Nigerian high schools, open only to SS2 pupils between the ages of 14 and 17. It is offered to help students chart their desired career path and to motivate them to reach their full potential.

The competition first took place in 2017, when it was known simply as the SPAK Competition. Interswitch took over the competition in 2018, and it was renamed InterswitchSPAK (ISPAK).

Furthermore, the competition got off to a good start with Interswitch SPAK 1.0, which took place in 2018, and Interswitch SPAK 2.0, which took place in 2019.

SPAK Past Questions And Answers in PDF Format

Those who have bought our past questions have given testimonies of how the material helped them, and we are confident it will help you as well. Studying these past questions can help you score higher and give you an edge over your competitors.

With proper use of our original Spak Past Questions in PDF Format, you will be well prepared for the Interswitch SPAK competition and can come out with flying colours. The past questions are sent to your email address or WhatsApp once you pay for them. They come as a soft copy (PDF) that you can download and read on your Android phone or laptop at your own convenience, wherever and whenever.

How is Spak Past Questions in PDF Patterned

Normally, the Spak Past Questions in PDF Format follow a theory question pattern. We have made it very easy for you: we bring together the questions from many years and indicate the specific year in which each one appeared. We also provide the correct answers to save you time. All you need to do is devote quality time to studying the Spak Past Questions in PDF Format and watch yourself score better than you expected.

Why You Need A Copy of Spak Past Questions in PDF Download

You need this past question for the following reasons:

- It will expose you to the questions that have been asked years back as most of those questions and answers get repeated every year while some will just be rephrased. So if you don’t have a copy of this past question, then you are losing out.

- It also provides useful information about the types of questions to expect and helps you avoid unpleasant surprises.

- You will be able to detect and answer repeated questions quickly and easily.

- You will be able to find out the number of questions you will see in the exams.

Spak Past Questions in PDF Sample

To show that we are giving out the original Spak Past Questions in PDF Format, we have decided to give you some free samples as proof of what we said earlier.

1. How is Apache Spark different from MapReduce?

| Apache Spark | MapReduce |
| --- | --- |
| Spark processes data in batches as well as in real time | MapReduce processes data in batches only |
| Spark can run up to 100 times faster than Hadoop MapReduce for in-memory workloads | Hadoop MapReduce is slower for large-scale data processing |
| Spark stores data in RAM (in-memory), so it is quicker to retrieve | Hadoop MapReduce stores data in HDFS, so retrieval takes longer |
| Spark provides caching and in-memory data storage | Hadoop MapReduce is highly disk-dependent |

2. What are the important components of the Spark ecosystem?

Apache Spark has 3 main categories that comprise its ecosystem. Those are:

- Language support: Spark integrates with several languages for building applications and performing analytics. These languages are Java, Python, Scala, and R.

- Core Components: Spark has 5 main core components. These are Spark Core, Spark SQL, Spark Streaming, Spark MLlib, and GraphX.

- Cluster Management: Spark can be run in 3 environments. Those are the Standalone cluster, Apache Mesos, and YARN.

3. Explain how Spark runs applications with the help of its architecture.

This is one of the most frequently asked Spark interview questions, and the interviewer will expect you to give a thorough answer to it.

Spark applications run as independent sets of processes on a cluster, coordinated by the SparkSession object (and its underlying SparkContext) in the driver program. The cluster manager allocates resources on the worker nodes, and tasks are assigned with one task per partition. A task applies its unit of work to the dataset in its partition and outputs a new partition dataset. Because iterative algorithms apply operations repeatedly to the data, they benefit from caching datasets across iterations. Finally, the results are sent back to the driver application or saved to disk.

4. What are the different cluster managers available in Apache Spark?

- Standalone Mode: By default, applications submitted to a standalone mode cluster run in FIFO order, and each application tries to use all available nodes. You can launch a standalone cluster either manually, by starting a master and workers by hand, or by using the launch scripts provided with Spark. It is also possible to run these daemons on a single machine for testing.

- Apache Mesos: Apache Mesos is an open-source project to manage computer clusters, and can also run Hadoop applications. The advantages of deploying Spark with Mesos include dynamic partitioning between Spark and other frameworks as well as scalable partitioning between multiple instances of Spark.

- Hadoop YARN: Apache YARN is the cluster resource manager of Hadoop 2. Spark can be run on YARN as well.

- Kubernetes: Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

5. What is the significance of Resilient Distributed Datasets in Spark?

Resilient Distributed Datasets (RDDs) are the fundamental data structure of Apache Spark and are part of Spark Core. RDDs are immutable, fault-tolerant, distributed collections of objects that can be operated on in parallel. RDDs are split into partitions, which can be processed on different nodes of a cluster.

RDDs are created by either transformation of existing RDDs or by loading an external dataset from stable storage like HDFS or HBase.


6. What is a lazy evaluation in Spark?

When Spark operates on any dataset, it remembers the instructions. When a transformation such as a map() is called on an RDD, the operation is not performed instantly. Transformations in Spark are not evaluated until you perform an action, which aids in optimizing the overall data processing workflow, known as lazy evaluation.
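
The idea can be illustrated without a cluster. The sketch below is only an analogy built on a lazy view from the Scala standard library, not Spark itself: the `map` call plays the role of a transformation and `toList` plays the role of an action. The object name `LazyEvaluationSketch` and the counter are hypothetical names introduced for illustration.

```scala
// Analogy only: a Scala collection view behaves lazily, much like an RDD transformation.
object LazyEvaluationSketch {
  // Counts how many times the mapping function has actually run.
  var evaluated = 0

  // A lazy view over the numbers 1 to 5: nothing is computed yet.
  val numbers = (1 to 5).view

  // "Transformation": the function is recorded but not executed.
  val doubled = numbers.map { n =>
    evaluated += 1
    n * 2
  }

  // No element has been processed so far.
  val before = evaluated

  // "Action": asking for a concrete result forces the computation.
  val result = doubled.toList

  // Every element has now been processed once.
  val after = evaluated
}
```

Reading `before` and `after` shows that the work happens only when the result is requested, which is the behaviour the answer above describes for Spark transformations and actions.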

7. What makes Spark good at low latency workloads like graph processing and Machine Learning?

Apache Spark stores data in memory for faster processing and for building machine learning models. Machine learning algorithms require multiple iterations and different conceptual steps to create an optimal model, and graph algorithms traverse all the nodes and edges of a graph. Because these workloads revisit the same data many times, keeping that data in memory between iterations improves their performance.

8. How can you trigger automatic clean-ups in Spark to handle accumulated metadata?

To trigger the clean-ups, you need to set the parameter spark.cleaner.ttl.

9. How can you connect Spark to Apache Mesos?

There are a total of 4 steps that can help you connect Spark to Apache Mesos.

- Configure the Spark Driver program to connect with Apache Mesos

- Put the Spark binary package in a location accessible by Mesos

- Install Spark in the same location as Apache Mesos

- Configure the spark.mesos.executor.home property for pointing to the location where Spark is installed

10. What is a Parquet file and what are its advantages?

Parquet is a columnar format that is supported by several data processing systems. With the Parquet file, Spark can perform both read and write operations.

Some of the advantages of having a Parquet file are:

- It lets you fetch only the specific columns you need.

- It consumes less space.

- It uses type-specific encoding.

- It requires fewer I/O operations.

11. What is shuffling in Spark? When does it occur?

Shuffling is the process of redistributing data across partitions that may lead to data movement across the executors. The shuffle operation is implemented differently in Spark compared to Hadoop.

Shuffling has 2 important compression parameters:

- spark.shuffle.compress – determines whether the engine compresses shuffle outputs

- spark.shuffle.spill.compress – determines whether intermediate shuffle spill files are compressed

Shuffling occurs when joining two tables or when performing byKey operations such as groupByKey or reduceByKey.

14. What are the various functionalities supported by Spark Core?

Spark Core is the engine for parallel and distributed processing of large data sets. The various functionalities supported by Spark Core include:

- Scheduling and monitoring jobs

- Memory management

- Fault recovery

- Task dispatching

15. How do you convert a Spark RDD into a DataFrame?

There are 2 ways to convert a Spark RDD into a DataFrame:

- Using the helper function toDF

```scala
import com.mapr.db.spark.sql._

// <table-name> is a placeholder for the table to load
val df = sc.loadFromMapRDB(<table-name>)
  .where(field("first_name") === "Peter")
  .select("_id", "first_name")
  .toDF()
```

- Using SparkSession.createDataFrame

You can convert an RDD[Row] to a DataFrame by calling createDataFrame on a SparkSession object:

```scala
def createDataFrame(rowRDD: RDD[Row], schema: StructType): DataFrame
```

16. Explain the types of operations supported by RDDs.

RDDs support 2 types of operation:

- Transformations: operations performed on an RDD that create a new RDD containing the results (examples: map, filter, join, union)

- Actions: operations that return a value after running a computation on an RDD (examples: reduce, first, count)
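
A minimal sketch of the two kinds of operation, assuming Spark is on the classpath and a SparkSession is passed in. The object name `RddOperationsSketch` and the sample data are hypothetical and chosen only for illustration.

```scala
import org.apache.spark.sql.SparkSession

object RddOperationsSketch {
  // Returns (sum of squares, number of squares, first square) for the even numbers in 1..10.
  def run(spark: SparkSession): (Int, Long, Int) = {
    val numbers = spark.sparkContext.parallelize(1 to 10)

    // Transformations: each call returns a new RDD and computes nothing yet.
    val evens = numbers.filter(_ % 2 == 0)
    val squares = evens.map(n => n * n)

    // Actions: each call runs a job and returns a plain value to the driver.
    val total = squares.reduce(_ + _)
    val howMany = squares.count()
    val first = squares.first()

    (total, howMany, first)
  }
}
```

With a local session, for example `SparkSession.builder().master("local[*]").appName("rdd-ops").getOrCreate()`, calling `RddOperationsSketch.run(spark)` returns the three action results as one tuple.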

17. What is a Lineage Graph?

This is another frequently asked Spark interview question. A lineage graph is a graph of the dependencies between an existing RDD and a new RDD. It records all the dependencies between RDDs, rather than the original data.

An RDD lineage graph is needed when we want to compute a new RDD or recover lost data from a lost persisted RDD. By default, Spark does not replicate data in memory, so if any data is lost, it can be rebuilt using the RDD lineage. The lineage graph is also called an RDD operator graph or RDD dependency graph.

18. What do you understand about DStreams in Spark?

A Discretized Stream (DStream) is the basic abstraction provided by Spark Streaming.

It represents a continuous stream of data, which is either the input stream received from a source or a processed stream generated by transforming the input stream.

19. Explain Caching in Spark Streaming.

Caching, also known as persistence, is an optimization technique for Spark computations. Like RDDs, DStreams allow developers to persist the stream's data in memory. That is, using the persist() method on a DStream will automatically persist every RDD of that DStream in memory. It helps to save interim partial results so they can be reused in subsequent stages.

For input streams that receive data over the network, the default persistence level replicates the data to two nodes for fault tolerance.

20. What is the need for broadcast variables in Spark?

Broadcast variables allow the programmer to keep a read-only variable cached on each machine rather than shipping a copy of it with tasks. They can be used to give every node a copy of a large input dataset in an efficient manner. Spark distributes broadcast variables using efficient broadcast algorithms to reduce communication costs.

```scala
scala> val broadcastVar = sc.broadcast(Array(1, 2, 3))
broadcastVar: org.apache.spark.broadcast.Broadcast[Array[Int]] = Broadcast(0)

scala> broadcastVar.value
res0: Array[Int] = Array(1, 2, 3)
```


Next, let us look at the Spark interview questions for experienced candidates.

Apache Spark Interview Questions for Experienced

21. How to programmatically specify a schema for DataFrame?

A DataFrame can be created programmatically in three steps:

- Create an RDD of Rows from the original RDD.

- Create the schema, represented by a StructType, matching the structure of the Rows in the RDD created in the first step.

- Apply the schema to the RDD of Rows via the createDataFrame method provided by SparkSession.
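
The three steps can be sketched as follows, assuming Spark is on the classpath. The object name `ProgrammaticSchemaSketch`, the sample records, and the column names are hypothetical and serve only as an illustration.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

object ProgrammaticSchemaSketch {
  def buildPeople(spark: SparkSession): DataFrame = {
    // Step 1: create an RDD of Rows from the original RDD of raw text records.
    val original: RDD[String] =
      spark.sparkContext.parallelize(Seq("Peter,34", "Ada,29"))
    val rows: RDD[Row] = original.map { line =>
      val fields = line.split(",")
      Row(fields(0), fields(1).trim.toInt)
    }

    // Step 2: describe the structure of those Rows with a StructType.
    val schema = StructType(Seq(
      StructField("first_name", StringType, nullable = false),
      StructField("age", IntegerType, nullable = false)
    ))

    // Step 3: apply the schema to the RDD of Rows.
    spark.createDataFrame(rows, schema)
  }

  def main(args: Array[String]): Unit = {
    // Local session for illustration; the master URL and app name are assumptions.
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("programmatic-schema-sketch")
      .getOrCreate()
    buildPeople(spark).printSchema()
    spark.stop()
  }
}
```

Building the schema by hand like this is useful when the column names or types are only known at runtime, so a case class cannot be written in advance.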

22. Which transformation returns a new DStream by selecting only those records of the source DStream for which the function returns true?

1. map(func)

2. transform(func)

3. filter(func)

4. count()

The correct answer is option 3, filter(func).

23. Does Apache Spark provide checkpoints?

This is one of the most frequently asked Spark interview questions, and the interviewer expects a detailed answer, not just a yes or no.

Yes, Apache Spark provides an API for adding and managing checkpoints. Checkpointing is the process of making streaming applications resilient to failures. It allows you to save data and metadata into a checkpointing directory. In case of a failure, Spark can recover this data and resume from wherever it stopped.

There are 2 types of data for which we can use checkpointing in Spark.

Metadata Checkpointing: Metadata means data about data. Metadata checkpointing saves the metadata to fault-tolerant storage such as HDFS. The metadata includes configurations, DStream operations, and incomplete batches.

Data Checkpointing: Here, the RDDs are saved to reliable storage. This is needed by some stateful transformations, in which an upcoming RDD depends on the RDDs of previous batches.

24. What do you mean by sliding window operation?

In networking, a sliding window controls the transmission of data packets between computers. In Spark, the Spark Streaming library provides windowed computations, in which transformations on RDDs are applied over a sliding window of data.
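
To make the window idea concrete, here is a small analogy using only the Scala standard library rather than Spark Streaming itself. Each inner sequence stands for one batch of records, and the names `SlidingWindowSketch`, `windowLength`, and `slideInterval` are hypothetical, measured here in batches rather than in time.

```scala
// Analogy only: plain Scala collections stand in for a stream of batches.
object SlidingWindowSketch {
  // Each inner Seq holds the records that arrived in one batch interval.
  val batches: Seq[Seq[Int]] = Seq(Seq(1, 2), Seq(3), Seq(4, 5), Seq(6), Seq(7, 8))

  val windowLength = 3  // number of batches covered by each window
  val slideInterval = 2 // number of batches the window advances each time

  // Sum of all records inside each window position.
  val windowSums: List[Int] =
    batches.sliding(windowLength, slideInterval).map(_.flatten.sum).toList
}
```

The first window covers batches one to three and the second covers batches three to five, so the third batch contributes to both sums. That overlap is what distinguishes a sliding window from processing each batch on its own.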

25. What are the different levels of persistence in Spark?

DISK_ONLY – Stores the RDD partitions only on disk

MEMORY_ONLY_SER – Stores the RDD as serialized Java objects, with one byte array per partition

MEMORY_ONLY – Stores the RDD as deserialized Java objects in the JVM. If the RDD does not fit in the available memory, some partitions won't be cached

OFF_HEAP – Works like MEMORY_ONLY_SER but stores the data in off-heap memory

MEMORY_AND_DISK – Stores the RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, the partitions that do not fit are stored on disk

MEMORY_AND_DISK_SER – Identical to MEMORY_ONLY_SER, except that partitions that do not fit in memory are stored on disk

26. What is the difference between map and flatMap transformation in Spark Streaming?

| map() | flatMap() |
| --- | --- |
| Returns a new DStream by passing each element of the source DStream through a function func | Similar to map: it applies a function to each element of the source, but each input can produce several outputs, which are flattened into the result |
| Takes one element as input, processes it according to custom code (specified by the developer), and returns exactly one element | Allows returning 0, 1, or more elements for each input element |
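
The difference is easiest to see on a small collection. The sketch below uses ordinary Scala lists rather than DStreams, because `map` and `flatMap` follow the same one-to-one versus one-to-many pattern there. The object name `MapVsFlatMapSketch` and the sample lines are hypothetical.

```scala
// Plain Scala lists are used here in place of DStreams or RDDs.
object MapVsFlatMapSketch {
  val lines: List[String] = List("hello spark", "past questions and answers")

  // map: exactly one output element for every input element.
  val lengths: List[Int] = lines.map(_.length)

  // flatMap: each input may yield zero, one, or many outputs, flattened into one list.
  val words: List[String] = lines.flatMap(_.split(" ").toList)
}
```

Here `lengths` has the same number of elements as `lines`, while `words` has one element per word, so its size differs from that of the input.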

30. What are the different MLlib tools available in Spark?

- ML Algorithms: Classification, Regression, Clustering, and Collaborative filtering

- Featurization: Feature extraction, Transformation, Dimensionality reduction, and Selection

- Pipelines: Tools for constructing, evaluating, and tuning ML pipelines

- Persistence: Saving and loading algorithms, models, and pipelines

- Utilities: Linear algebra, statistics, data handling

We hope it is clear so far. Let us know which Apache Spark interview questions you were asked during the interview process.

How to Buy

The complete Spak Past Questions in PDF Format with accurate answers is N2,000.

To purchase this past question, please chat with the WhatsApp number 08162517909 to check availability before you proceed to make payment.

After payment, send the (1) proof of payment, (2) course of study, (3) name of past questions paid for and (4) email address to Ifiokobong (Examsguru) at Whatsapp: 08162517909. We will send the past questions to your email address.

Delivery Assurance

How can you be sure we will deliver the past question to you after payment?

Our services are based on honesty and integrity. That is why we are very popular.

For us (ExamsGuru Team), we have been in business since 2012 and have been delivering honest and trusted services to our valued customers.

Since we started, we have not had any negative comments from our customers; instead, all of them are happy with us.

Our past questions and answers are original and from the source. So, your money is in safe hands, and we promise to deliver once we confirm your payment.

Each year, thousands of students gain admission into their schools of choice with the help of our past questions and answers.

7 Tips to Prepare for Spak Exams

- Don’t make reading your hobby: Many people list reading as a hobby on their CV, which may be fair because they have finished schooling. You are still in school, so reading should be a top priority, not a hobby. Read far and wide to sharpen your aptitude.

- Get Exam Preparation Materials: These include textbooks, dictionaries, Spak Past Questions in PDF Format, mock questions, and others. These materials will help you master the scope of the exams you are preparing for.

- Attend Extramural Classes: Register for and attend extramural classes in your location. These classes will not only refresh your memory but also boost your classroom understanding and expose you to new knowledge.

- Sleep when you feel like it: When you are preparing for any exam, sleep is very important because it helps to consolidate memory. Caution: sleep only when you need to, and don’t oversleep.

- Make sure you are healthy: Sickness can cause excessive tiredness and fatigue and will not allow you to concentrate on reading. If you feel unwell, report to your parent, a nurse, or a doctor. Make sure you are well.

- Eat when you feel like it: During the exam preparation period, avoid overeating so that you do not become sleepy. Eat small, light meals whenever you feel hungry. Eat more fruit, and drink milk and glucose. This will help you retain what you read.

- Reduce your time on social media: Some people spend almost all their time on Facebook, Twitter, WhatsApp, and Messenger. This is harmful if you are preparing for exams. Try to reduce the time you spend on social media during this period; you can return to it after the exams.

If you like these tips, consider sharing them with your friends and relatives. Do you have a question or comments? Put it on the comment form below. We will be pleased to hear from you and help you to score as high as possible.

We wish you good luck!
